“As AI advances, it’s important to balance public safety with our democratic freedoms,” says Microsoft President Brad Smith.

What if the U.S. Immigration and Customs Enforcement agency had used facial recognition technology to help separate children from their parents at the border?

If that had happened, who would be morally culpable? Would responsibility rest only with the White House, which implemented and then rescinded its controversial “zero tolerance” policy last month after a federal judge ruled that it was unconstitutional? Or should the organization that supplied the artificial intelligence and biometrics technology also be held responsible?

These are some of the questions being raised in the wake of reports that police and government agencies are increasingly gaining access to facial recognition tools that allow them to identify individuals more quickly and in more contexts – not just from mug shots, but also on the street, in crowds and from unmanned aerial vehicles.

As questionable uses or potential applications of the technology have surfaced, employees at Amazon, Google, Microsoft and Salesforce have fired ethical broadsides at senior management, urging them to clearly articulate how their facial biometrics technology is being used and to commit to ensuring that such use never violates people’s human rights.

Calls for Regulation

On Friday, Microsoft President Brad Smith weighed in, saying that as facial recognition technology continues to rapidly improve, it’s imperative that society agree on clear guidelines for how and when it can be used. In particular, he called on Congress to create a bipartisan commission tasked with developing new laws to regulate the use of biometrics technology in the United States.

“This should build on recent work by academics and in the public and private sectors to assess these issues and to develop clearer ethical principles for this technology,” Smith said in a blog post.

“The purpose of such a commission should include advice to Congress on what types of new laws and regulations are needed, as well as stronger practices to ensure proper congressional oversight of this technology across the executive branch,” Smith said.

Cloud Platforms Offer Facial Recognition

Already, facial recognition capabilities have been built into cloud-based platforms offered by the likes of Affectiva, Amazon, Google, IBM, Kairos, Microsoft, NEC, OpenCV and Salesforce. Proponents laud the technology in part for its potential to stop terrorists and kidnappers and to spot fugitives.

“These issues are not going to go away.”

—Microsoft’s Brad Smith

But Alan Woodward, a computer science professor at the University of Surrey, tells Information Security Media Group that facial recognition is a slippery slope, and that lawmakers need to put regulations governing its use into place as quickly as possible (see Facial Recognition: Big Trouble With Big Data Biometrics).

“History has taught us that data gathered – which now can include biometric data – is often misused,” he says. “This needs to be considered and regulated very heavily otherwise we really will end up living in 1984 without knowing it.”

Microsoft Employees Criticize ICE Contract

Concerns over the use of facial recognition technology in a potentially inhumane manner are already far from academic.

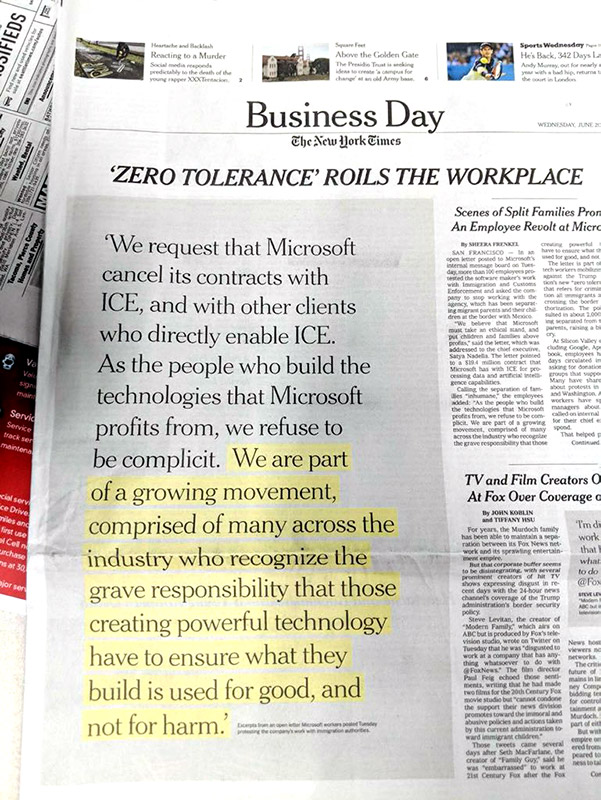

Last month, more than 100 Microsoft employees posted an open letter to Microsoft CEO Satya Nadella, pointing to a $19.4 million contract the company has with ICE to provide artificial intelligence capabilities and calling on the company to cancel the contract.

The employees’ ire was sparked in part by a Microsoft marketing blog, published in January, which said that ICE’s contract with Microsoft for Azure Government cloud computing capabilities would enable immigration authorities to “process data on edge devices or utilize deep learning capabilities to accelerate facial recognition and identification.”

“We are part of a growing movement, comprised of many across the industry who recognize the grave responsibility that those creating powerful technology have to ensure what they build is used for good, and not for harm,” the employees wrote in the wake of news reports relaying images of immigrant children who had been separated from their parents and housed in what looked like cages inside detention facilities.

The employees called on Microsoft to cancel the ICE contract, to publicly commit to never violating international human rights law and to “commit to transparency and review regarding contracts between Microsoft and government agencies, in the U.S. and beyond.”

Labeling ICE as “an agency that has shown repeated willingness to enact inhumane and cruel policies,” the employees wrote that they had zero tolerance for anyone who might abuse their technology to violate people’s rights.

“We refuse to be complicit,” they said.

Where to Draw the Line?

Microsoft’s president responded specifically to those allegations in his blog post, first touching on Microsoft’s work with ICE, a law enforcement agency that is part of the U.S. Department of Homeland Security. “We’ve since confirmed that the contract in question isn’t being used for facial recognition at all. Nor has Microsoft worked with the U.S. government on any projects related to separating children from their families at the border, a practice to which we’ve strongly objected,” Smith said.

Instead, the contract involves supporting the agency’s “legacy email, calendar, messaging and document management workloads,” Smith said.

But at what point should an organization put its foot down with a federal agency operating in a manner to which at least some of its employees object?

“This type of IT work goes on in every government agency in the United States, and for that matter virtually every government, business and nonprofit institution in the world,” Smith said. “Some nonetheless suggested that Microsoft cancel the contract and cease all work with ICE.”

Answering these questions – via clear, legal frameworks that govern the use of such technology – is imperative, Smith said, noting: “These issues are not going to go away.”

Indeed, Woodward warns that biometrics technology used in an acceptable manner today could still be abused in the future. “Circumstances change, governments change and what is illegal today – and for which you might be happy for your biometrics to be used for screening – may change tomorrow,” he says. “What is a political rally today might be reclassified as a riot in future and suddenly you find your rights being infringed.”

Privacy rights groups are already sounding alarms. In May, the American Civil Liberties Union and 35 other organizations – including the Electronic Frontier Foundation and Muslim Justice League – slammed Amazon for “marketing Rekognition for government surveillance” and called on Amazon CEO Jeff Bezos to cease offering the service to governments (see Amazon Rekognition Stokes Surveillance State Fears).

Google and Salesforce Employees Urge Action

Employees at firms that provide such technology – beyond Microsoft – have also been calling on senior executives to behave in a more transparent and ethically demonstrative manner.

“History has taught us that data gathered – which now can include biometric data – is often misused.”

—Alan Woodward, University of Surrey

Last month, a dozen Google employees resigned over Project Maven, a Pentagon effort to use artificial intelligence to support drone warfare imaging. Google subsequently decided not to renew the contract, which expires in 2019.

Also last month, more than 650 employees of Salesforce signed a letter to CEO Marc Benioff, calling on him to reevaluate the company’s Service Cloud contract with U.S. Customs and Border Protection, the country’s border agency.

“Given the inhumane separation of children from their parents currently taking place at the border, we believe that our core value of Equality is at stake and that Salesforce should reexamine our contractual relationship with CBP and speak out against its practices,” the employees wrote in their letter, which was obtained by BuzzFeed News.

Salesforce should also “craft a plan for examining the use of all our products, and the extent to which they are being used for harm,” the employees wrote.

They also called on the company to take a clear stand against “destructive” Trump administration policies and to ensure no Salesforce technology be allowed to support them.

“We recognize the explicit policy of separating children at the border has been stopped, but that simply returns us to a status quo of detaining children with their parents at the border,” the employees wrote. “We believe it is vital for Salesforce to stand up against both the practice that inspired this letter and any future attempts to merely make this destructive state of affairs more palatable.”

Amazon Employees to Bezos: Learn From History

Some Amazon employees also responded to the forced child and parent separations at the U.S. border by demanding last month that Bezos restrict the use of the company’s Rekognition facial recognition technology.

“We refuse to build the platform that powers ICE, and we refuse to contribute to tools that violate human rights,” a group of Amazon employees wrote last month in a letter to Amazon CEO Jeff Bezos, obtained by The Hill. “As ethically concerned Amazonians, we demand a choice in what we build, and a say in how it is used.”

History has shown that new technologies can be abused in unexpected ways, they also warned.

“We learn from history, and we understand how IBM’s systems were employed in the 1940s to help Hitler,” the Amazon employees wrote in their letter, citing an investigative report into how IBM sold technology to Nazi Germany’s statistical offices and census departments.

“IBM did not take responsibility then, and by the time their role was understood, it was too late,” they wrote.

Source: databreachtoday.com


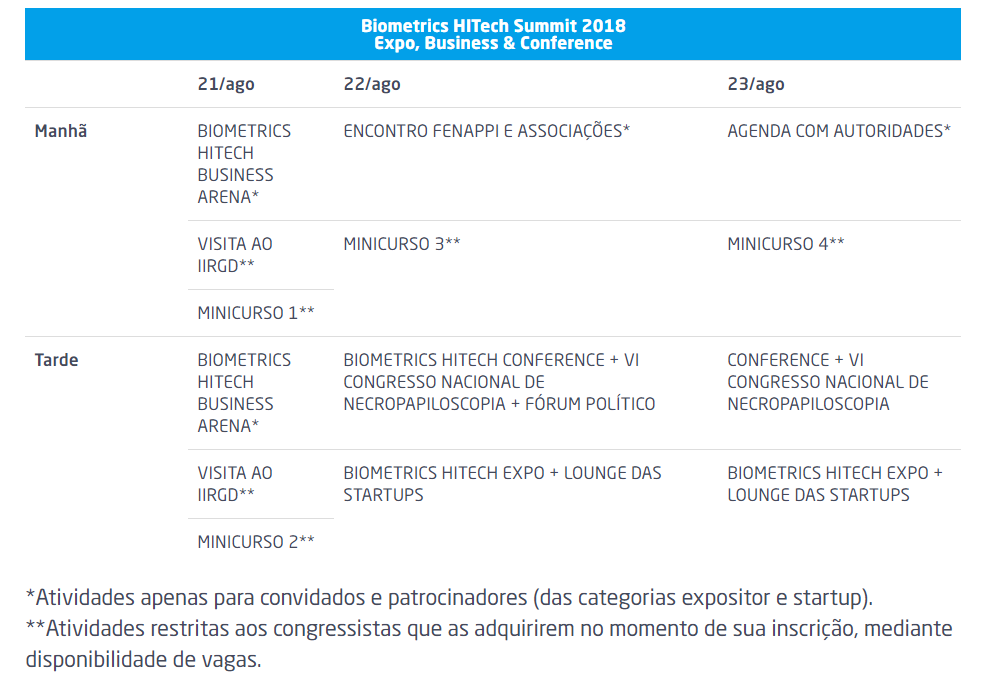

Em 2018, o Biometrics HITech Summit – Expo, Business and Conference, único evento do Brasil especializado em biometrias e tecnologias de identificação, chega a sua quarta edição e será realizado de 21 a 23 de agosto, no Centro FECOMERCIO de Eventos, em São Paulo – SP

Em 2018, o Biometrics HITech Summit – Expo, Business and Conference, único evento do Brasil especializado em biometrias e tecnologias de identificação, chega a sua quarta edição e será realizado de 21 a 23 de agosto, no Centro FECOMERCIO de Eventos, em São Paulo – SP